
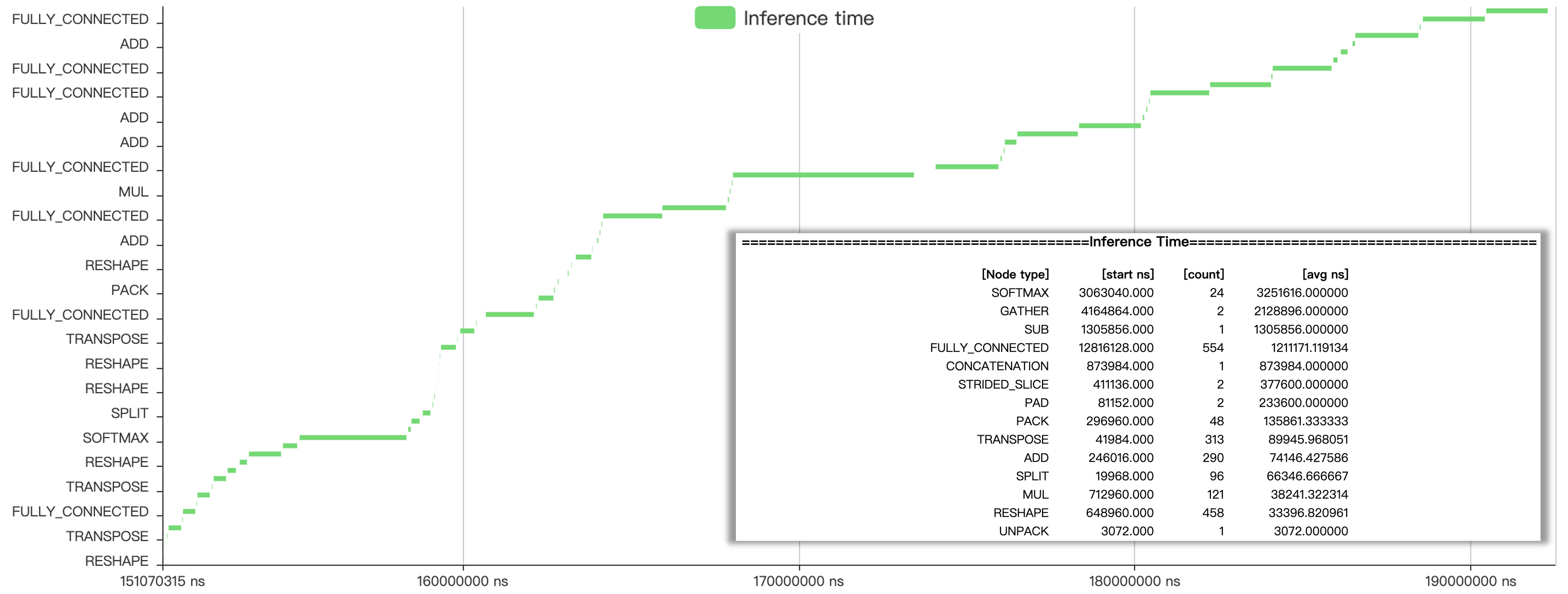

This page explains how to use nnPerf, a real-time on-device profiler that collects and analyzes DNN model runtime inference latency on mobile platforms. nnPerf demystifies the hidden layers and metrics used in pursuing DNN optimizations and adaptations at the granularity of operators and kernels, so that every facet contributing to a DNN model's runtime efficiency is accessible to mobile developers through well-defined APIs.

With nnPerf, mobile developers can identify bottlenecks in model runtime efficiency and optimize the model architecture to meet system-level objectives (SLOs).

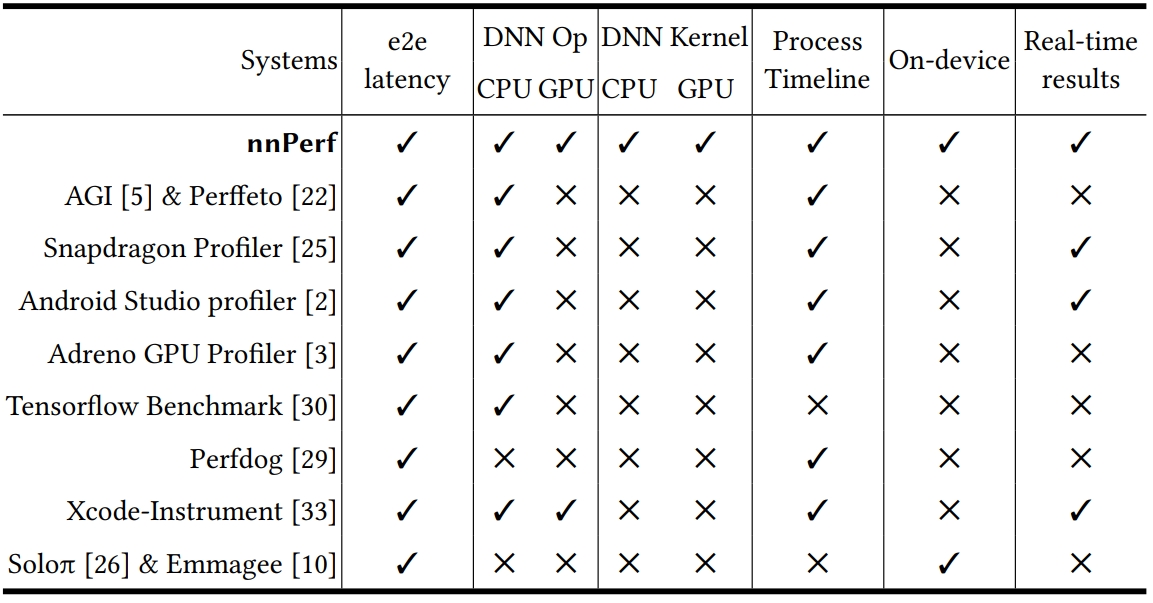

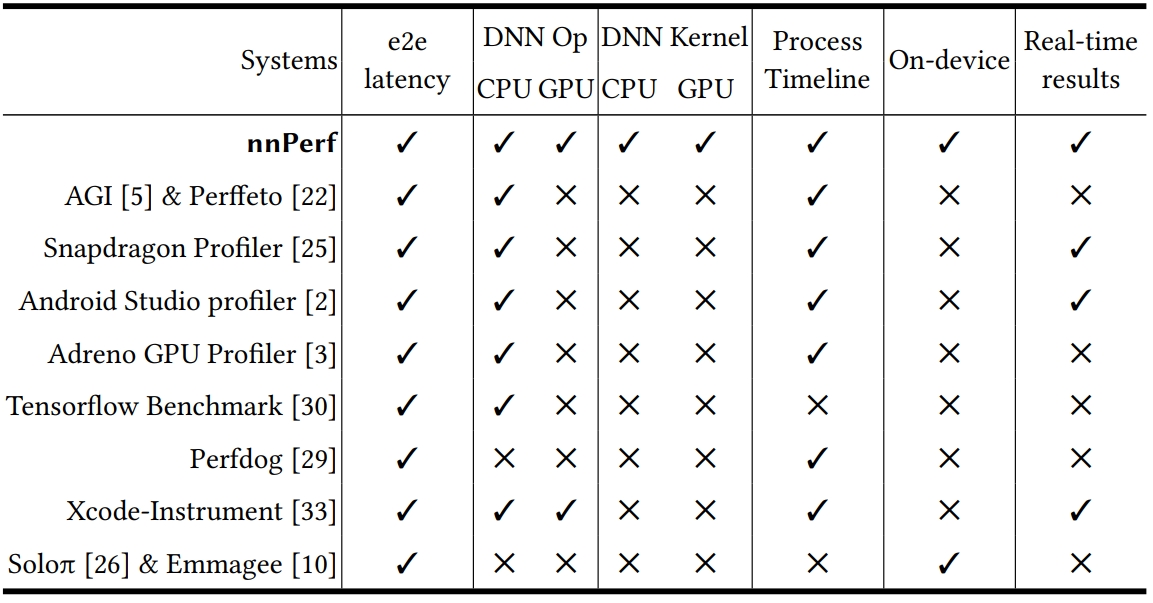

The figure below compares nnPerf to existing DNN model analyzers designed for mobile platforms.

To cite this tool, the best reference is the SenSys 2023 paper.

1. Plug-and-play design principles

• Follows a self-contained approach with no need for extra libraries or complex installations.

2. Real-time on-device profiling

• Monitors DNN inference delays directly on the device without external dependencies like adb.

3. Support measuring fine-grained information at the GPU kernel level

• Allows deep inspection of GPU kernels for detailed insights into DNN model optimization.

For more design details and features, please refer to our SenSys 2023 paper.

Set up nnPerf in just a few steps. You can download the latest version of nnPerf here.

1. Use adb to connect to a smartphone or other mobile platform running an Android-based system

2. Install the nnPerf_v1.0.apk

adb install -t .\nnPerf_v1.0.apk
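The two steps above can also be scripted. The following is a minimal Python sketch, assuming `adb` is on the `PATH`; the helper names `install_command` and `install_apk` are hypothetical and not part of nnPerf. The `-t` flag tells adb to accept packages flagged as test-only.

```python
import subprocess
from typing import List, Optional


def install_command(apk_path: str, serial: Optional[str] = None) -> List[str]:
    """Build the adb argument list that installs an APK.

    serial is optional: pass a device serial (as listed by `adb devices`)
    when more than one device is connected.
    """
    cmd = ["adb"]
    if serial:
        cmd += ["-s", serial]
    # -t allows installing packages flagged as test-only.
    cmd += ["install", "-t", apk_path]
    return cmd


def install_apk(apk_path: str, serial: Optional[str] = None) -> None:
    """Run the install; raises CalledProcessError if adb exits with an error."""
    subprocess.run(install_command(apk_path, serial), check=True)
```

Calling `install_apk("nnPerf_v1.0.apk")` from the directory that holds the APK runs the same command as above. Keeping the command builder separate from the call to `subprocess.run` makes it possible to inspect or log the command without a device attached.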

1. Install Android Studio 3.6.3 (Runtime version: 1.8.0_212-release-1586-b04 amd64).

2. Import Project

File -> Open -> Current file directory

3. Android Studio settings

• Android Gradle Plugin Version: 3.1.3

• Gradle Version: 4.4

• NDK Version: 21.0.6113669

• JDK Version: 1.8.0_211

• Compile SDK Version: 27

• Build Tools Version: 27.0.3

4. Run to profile

Output path: /data/data/com.example.android.nnPerf/

5. Model support list (Support for adding other .tflite models)

• mobilenetV3-Large-Float

• mobilenetV3-Small-Float

• EfficientNet-b0-Float

• mobilenetV1-Quant

• mobilenetV2-Float

• mobilenetV1-Float

• Squeezenet-Float

• Densenet-Float

• MNasNet-1.0

• MobileBert

• SSDMobileV2

• Esrgan
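The output path given in step 4 lies in the app's private data directory, which a plain `adb pull` usually cannot read on a non-rooted device. Below is a minimal Python sketch of one way to list and copy files from it. It assumes the installed build is debuggable so that `run-as` is permitted, which the nnPerf documentation does not state. The package name is inferred from the output path, and the helper names and any file name passed to them are hypothetical; substitute whatever files nnPerf actually writes.

```python
import subprocess
from typing import List

# Assumption: package name inferred from the output path in step 4.
PACKAGE = "com.example.android.nnPerf"
OUTPUT_DIR = "/data/data/" + PACKAGE


def list_command() -> List[str]:
    """adb arguments that list the output directory as the app's own user."""
    return ["adb", "shell", "run-as", PACKAGE, "ls", OUTPUT_DIR]


def cat_command(file_name: str) -> List[str]:
    """adb arguments that stream one output file to stdout."""
    # exec-out returns raw bytes, avoiding the line-ending
    # translation that `adb shell` may apply.
    return ["adb", "exec-out", "run-as", PACKAGE, "cat",
            OUTPUT_DIR + "/" + file_name]


def fetch(file_name: str, local_path: str) -> None:
    """Copy one file from the device's output directory to local_path."""
    result = subprocess.run(cat_command(file_name), check=True,
                            capture_output=True)
    with open(local_path, "wb") as f:
        f.write(result.stdout)
```

On a rooted device or an emulator, `adb root` followed by `adb pull` on the same directory may be simpler; the `run-as` route is sketched here because it needs no root access.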

We are developing an online timeline visualization tool for nnPerf and will release it later.

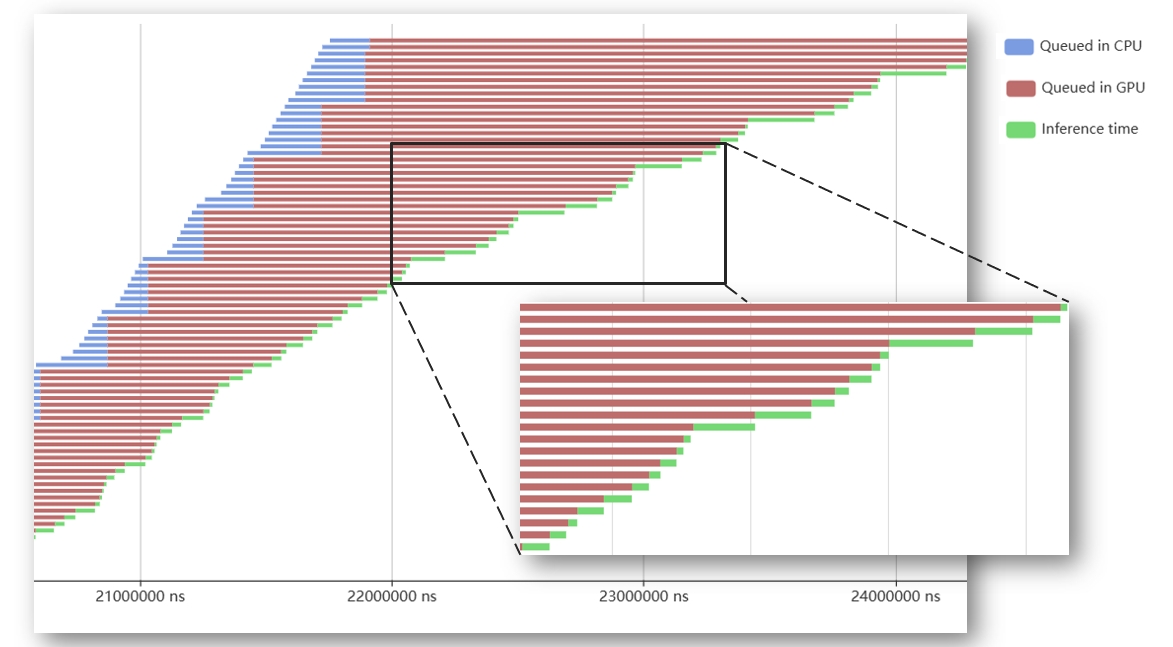

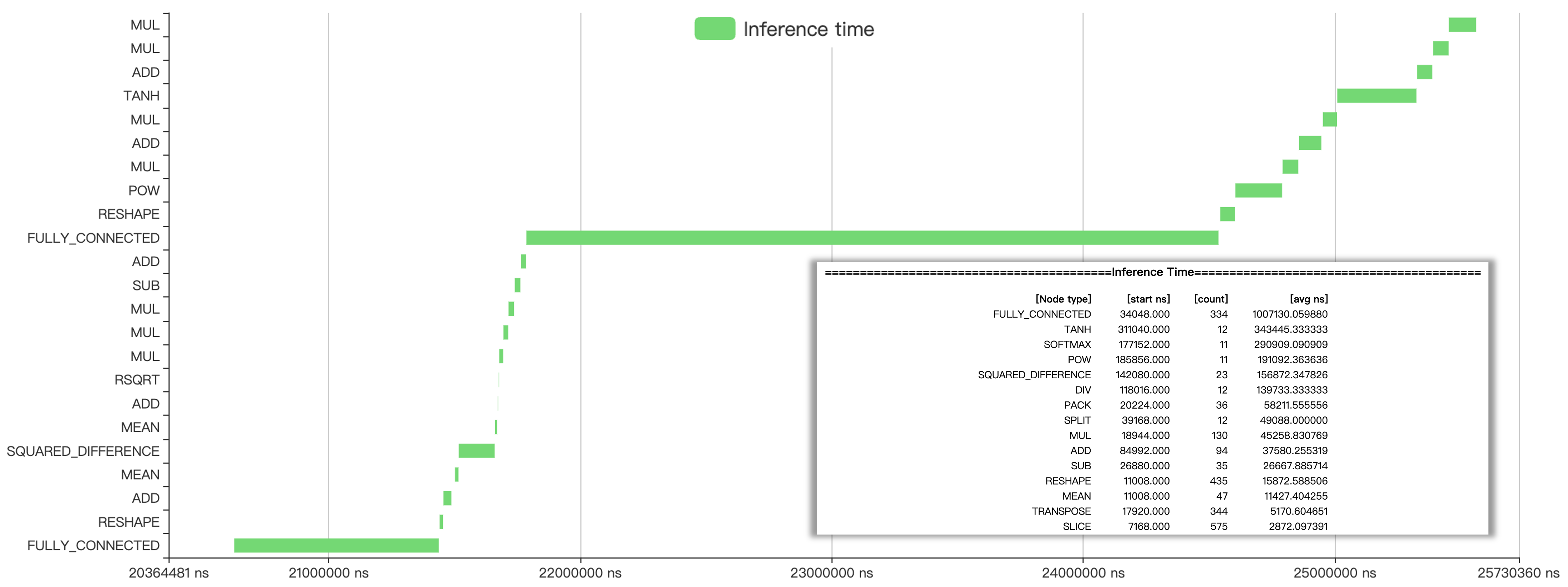

Features of our visualization tools:

1. Easily upload test data files and resize the interface using the scroll wheel.

2. Use the "Sort" button to organize data within the file based on three distinct categories.

3. Retrieve and query previously uploaded files conveniently through the History feature.

4. Selectively hide specific filters through the intuitive legend in the upper right corner of the interface.

nnPerf also supports profiling large language models (LLMs) such as GPT and BERT.

If you find nnPerf useful in your research, please consider citing:

@inproceedings{nnPerf,

author = {Chu, Haolin and Zheng, Xiaolong and Liu, Liang and Ma, Huadong},

title = {nnPerf: Demystifying DNN Runtime Inference Latency on Mobile Platforms},

year = {2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://dl.acm.org/doi/10.1145/3625687.3625797},

doi = {10.1145/3625687.3625797},

booktitle = {Proceedings of the 21st ACM Conference on Embedded Networked Sensor Systems},

pages = {125--137},

}

If you have feature requests or usage feedback for nnPerf, please email buptwins#163.com (replace # with @).

Giving you a "sophon" view of on-device neural network inference on mobile @SenSys'23